Tonight, after a horrible drive, I returned home to a cold flat: my central heating had broken. I had no heat and no hot water.

Fortunately I'm a bit of a DIY nut, so I ran a few quick checks on the boiler. It had power. I tried switching it off and on again, still nothing. When I turned on the hot tap the boiler made a noise, life! But no heat. I checked the pressure gauge: low, very low, but still in the green and no warning lights. I was all out of ideas until I decided to try putting some more pressure in the system. The pressure gauge rose and then the boiler fired up. Yay, heat, lovely heat, and hot water as a bonus!

So how many (be honest now) readers would check the pressure and put more water in the system? I'm guessing very few, and that's fair enough. Personally I'd have been pissed off if I'd spent the night in the cold and then an emergency visit from the boiler repair person had resulted in a 2 minute fix to add some pressure. Wouldn't it be better if you could point your computer at the boiler and have it return an error code which linked to a page on the manufacturer's website, with suggestions on how to fix the error?

This hasn't been the only time I've returned home to a problem. A few weeks ago I returned home to find the fridge door open. Everything was fine, but I was lucky. Now how many people have returned home to a flood? Or to some other situation that they would like to have known about earlier on?

I have a dream, a crazy one, and one that's very unlikely to happen. I've been thinking about many of these things for a while now, so maybe, just maybe, if I tell someone it will come true. Failing that we might just take a step closer, so here goes...

Every appliance in the house should have a network connection. There. Simple hey!

Now I know it's added cost, and manufacturing costs are kept as low as possible, but I would buy the next model up if it had a good network port and wasn't a blatant rip-off for what it was, as so often happens.

So what should be networked?

- Central heating? Yes

- Microwave? Yes

- Cooker? Yes

- Fridge? Yes

- Freezer? Yes

- Washing machine? Yes

- Tumble dryer? Yes

- Light switch? Yes (I'll explain later).

- Air con? Yes.

- TV? Yes

- HiFi? Yes

- Satellite receiver? Yes

- Wheelie Bin? Well, maybe a stand for it, waste by weight? Sneaky neighbour using your bin?

- Any more? Yes – everything (except the kettle and kitchen sink)

- heck, maybe even the kettle and kitchen sink (how much water do you use to wash up?)

I'm a big fan of home automation, you've probably figured that out from my dream, but the market sector is a horrible mess and the consumer devices are generally cheap (yet expensive) and naff.

I've got lots of X-10 devices lying around the flat that are no longer used (a very disappointing and expensive hobby in the UK).

Now I've got some Home-Easy devices kicking around, and for the most part they work well, but the range is disappointingly limited. A few simple changes and I'd be over the moon, but for now, it's still naff.

So what should this network connection onto these devices do?

I'd love to see two things. First, and most importantly, reporting: let's have some reasonable sensors wired into the network. Then, secondly, control of the devices as an added extra where possible.

A few examples:

- Central heating reporting – internal water pressure/flow, water supply pressure/flow, gas pressure/flow, flame temperature, last on, last off, send and return temperatures for the radiator system, system age, firmware version and, importantly, error code.

- Central heating control – on/off times if settable. Manual override of on/off.

- Fridge reporting – internal temperature, door status, light bulb status (1), alarms, last on, last off, power usage.

- Fridge control – operating temperature, alarm hi and lo points.

- Washing machine reporting – wash progress, efficiency (load/cost), age.

- Washing machine control – on time, off time. I have a Home-Easy device on a timer so my washing machine will start automatically in the morning and shut off late at night.

Now a lot of these values are currently measured one way or another but not reported, and some are not measured at all, such as power usage. But something like the fridge's temperature is so core to the operation of the device that it would be hard to find one that doesn't measure it.
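As a rough illustration of the fridge example above, here's a minimal sketch of how a reported reading and its alarm hi and lo points might fit together. The class name, field names and numbers are all invented for the example; no real appliance exposes data in this shape.

```python
from dataclasses import dataclass


@dataclass
class FridgeReport:
    """One hypothetical snapshot of what a networked fridge could report."""
    internal_temperature_c: float
    door_open: bool
    alarm_lo_c: float  # alarm lo point (assumed to be settable by the owner)
    alarm_hi_c: float  # alarm hi point (assumed to be settable by the owner)

    def alarms(self):
        """Return the list of alarm conditions that are currently active."""
        active = []
        if self.internal_temperature_c > self.alarm_hi_c:
            active.append("temperature high")
        if self.internal_temperature_c < self.alarm_lo_c:
            active.append("temperature low")
        if self.door_open:
            active.append("door open")
        return active


# Example values are made up: a warm fridge with the door left open.
report = FridgeReport(internal_temperature_c=9.5, door_open=True,
                      alarm_lo_c=1.0, alarm_hi_c=8.0)
print(report.alarms())
```

The point is only that once the numbers are reported, the alarm logic is a handful of comparisons that can live anywhere on the network.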

How about control? Well, a lot of devices still use mechanical systems, and maybe they should stay that way, although I wonder if it might not be cheaper and easier to provide the control through the network port and some solid state electronics.

Now I'm not suggesting every device implements a full blown web site with Ajax styled web pages and all that, that's a recipe for disaster! (2) Instead, what I would want to see is a REST-based API: you point something at the device and it returns a list of capabilities and endpoints that you can connect to and query the device's sensor values.

Why REST? Well, it's very simple: just hit the endpoint, maybe with a parameter or two, and it returns you a lump of XML, which is fantastic for automation. And if you want to make a nice UI to go with it, no problem. The device manufacturers could then have a skinnable application that the consumer runs on their PC or Mac (even if it's just JavaScript downloaded from the device's web server) and you've got a nice UI where you can provide much better feedback.
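To give a feel for how little is needed on the client side, here's a small sketch of reading such a capabilities reply. The XML format, element names, attributes and endpoint paths are entirely made up for illustration; this is one possible shape, not anything a real appliance returns.

```python
import xml.etree.ElementTree as ET

# Hypothetical reply from a fridge's discovery endpoint (invented format).
CAPABILITIES_XML = """
<device type="fridge" firmware="1.0">
  <sensor name="internal_temperature" unit="C" endpoint="/sensors/internal_temperature"/>
  <sensor name="door_status" unit="" endpoint="/sensors/door_status"/>
  <control name="target_temperature" unit="C" endpoint="/controls/target_temperature"/>
</device>
"""


def list_endpoints(xml_text):
    """Return {name: endpoint} for every sensor and control the device advertises."""
    root = ET.fromstring(xml_text.strip())
    return {
        element.get("name"): element.get("endpoint")
        for element in root
        if element.tag in ("sensor", "control")
    }


endpoints = list_endpoints(CAPABILITIES_XML)
for name, path in endpoints.items():
    print(name, "->", path)
```

In a real set-up the XML would come from an HTTP GET against the device rather than a string constant, but the parsing would look much the same.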

Where does this lead us? Well, you end up being able to monitor what's happening in your home, make changes based on the results, and prevent systems failing or catch them earlier. Wouldn't it have been better for me to find out my fridge was open as I was leaving the house, or that the heating had failed at lunchtime when I could have got it seen to in the afternoon? How about a warning when I go to bed that I've left the oven on or that the front door is unlocked?
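Once the readings are available, checks like these are only a few lines each. The sketch below assumes the readings have already been collected into a simple dictionary; the key names are hypothetical, and a missing reading is treated as "nothing to report".

```python
def bedtime_warnings(readings):
    """Return human-readable warnings for a dict of (hypothetical) sensor readings."""
    warnings = []
    if readings.get("oven_on"):
        warnings.append("The oven is still on")
    # Assume the door is locked unless a sensor explicitly says otherwise.
    if not readings.get("front_door_locked", True):
        warnings.append("The front door is unlocked")
    if readings.get("fridge_door_open"):
        warnings.append("The fridge door is open")
    return warnings


# Example readings are invented for the demonstration.
for warning in bedtime_warnings({"oven_on": True, "front_door_locked": False}):
    print(warning)
```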

Let's talk more about the fridge because there are two other aspects that are interesting. What happens when the power fails? The fridge and freezer start warming up, and with no power (perhaps a blown fuse), chances are there's no way for the device to warn you until it's too late.

Well, the network port can provide a low level of power, enough to ensure the monitoring and reporting system can be kept alive. Corporations everywhere are currently rolling out Power-Over-Ethernet for use by VoIP phones. How about consumers? Well, POE devices are coming onto the market and many of us have decent broadband, so how long before we want a nice desk phone connected to the internet giving us free calls?

So let's leverage that: a POE network switch, combined with a small UPS to keep the power going to your fridge, freezer, the little magic box that monitors them, and your broadband router, so you can get an SMS message or email when something goes wrong. The devices get to keep power when it's out, and get to tell you there's a problem.

If we're going to implement Power-Over-Ethernet we can even go a step further. This is where the light switches come in. Let's do away with the old light switch, it's got problems. Pull out that twin and earth cable feeding it and drop in a Cat 5 cable, attached to a POE switch at one end and, at the other, to a new lighting switch that has a motion sensor, a light level sensor, a microphone and possibly a speaker, and to top it off it's a touch screen like your iPhone.

Where does that take us? We get much better control over the lighting: the ability to control the lights in another room (left the downstairs light on after going to bed?), the ability to automatically switch off lights in rooms when the daylight is enough or there's no activity in the room, and maybe even that Star Trek intercom system where we can page another room (kitchen to bedroom page for the kids, anyone?).

I have a Home-Easy sensor in the kitchen and some small cabinet lights hooked up to a Home-Easy power switch. When I go in the kitchen the lights go on, and they're usually enough for my needs. When I leave, the lights go off after about a minute. With the X-10 set-up I was able to turn any other lights in the kitchen off as well, but I can't do that (3) with the Home-Easy set-up.
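The decision a networked light switch would have to make is easy to sketch. The function below is only an illustration of the logic described above (motion sensor, light level sensor, lights off about a minute after the last movement); the threshold value and the names are assumptions, not taken from any real product.

```python
OFF_DELAY_SECONDS = 60     # "after about a minute", as with the kitchen lights
DAYLIGHT_THRESHOLD = 300   # made-up light level above which lamps are pointless


def lights_should_be_on(now, last_motion, light_level):
    """Decide whether a room's lights should be on.

    now and last_motion are timestamps in seconds from the same clock;
    last_motion is None if no movement has been seen yet.
    """
    if light_level >= DAYLIGHT_THRESHOLD:
        return False  # daylight is enough
    if last_motion is None:
        return False  # nobody has been in the room
    return (now - last_motion) <= OFF_DELAY_SECONDS


# Someone moved 10 seconds ago in a dark kitchen.
print(lights_should_be_on(now=100, last_motion=90, light_level=50))
```

Because the rule is a pure function of the sensor values, the same logic could run in the switch itself or on a box elsewhere on the network.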

Did I mention the idea of a UPS on the POE system to maintain power? Well, let's push that a step further as well. All the rage nowadays is LED lighting: low power bulbs with long lives. What happens if it's dark and the power fails? People fall over, light candles and set fire to the house? How about using LED lighting, either the odd lamp or a nice arrangement of ceiling lamps like we see with the halogen ones nowadays? And how about if they were networked as well? When the power fails, one or two of these lamps could be driven in low power mode from the POE switch, the back-lights on the light switches could come on to help the occupants find the door, and the sensors could figure out if there's anyone in the room and save emergency power by switching off the lights in empty rooms.

How about if there's a fire? You can get smoke alarms that send out RF signals. You could monitor that and, in the event of a fire, switch on lights to help the occupants find their way out. Maybe the light switches can determine which rooms have people in them, or excess heat, to help out the fire brigade.

So I've diverged from the fridge, haven't I? How many times have we heard about the fridge that figures you're out of milk and orders it for you? We see photos of the fridge with an LCD monitor built in. Now that's silly, as those things get hot, it adds a lot of expense and it will no doubt provide a poor user experience because hardware people just don't get software (4).

You know, it would be great if our food had RFID tags and the fridge and freezer could work out what's in them (oh, and the bin could also (5)), but really those devices should just make this information available via the network port so we can get it from wherever we happen to be parked with our laptop or iPhone, perhaps even in the supermarket. Query your fridge to see what's missing whilst in Tesco's, anyone?
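Working out what's missing is then just a comparison between what you normally keep and what the fridge can currently see. A minimal sketch, assuming the fridge can hand back a plain list of item names read from the tags (the function name and the data are made up):

```python
def missing_items(usual_items, tags_in_fridge):
    """Return the usual items that the fridge can't currently see, sorted by name."""
    return sorted(set(usual_items) - set(tags_in_fridge))


# Invented example: what is normally kept versus what the tags report.
usual = ["milk", "butter", "eggs"]
seen = ["eggs", "cheese"]
print(missing_items(usual, seen))
```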

If there's ever a time to start pulling all this together it's now. The technology's there, the green movement is on us to save energy, electricity suppliers are going the way of smart meters so we can take advantage of cheaper electricity (or, if we're not careful, use more expensive electricity), broadband adoption is huge, and people are embracing the web more and more.

In fact some of this is already happening. You can DIY your own home sensor network with ioBridge, however that's more for techy geeks like me and I believe it still needs a server. I want to see that stuff built into appliances so all you have to do is connect up a network cable.

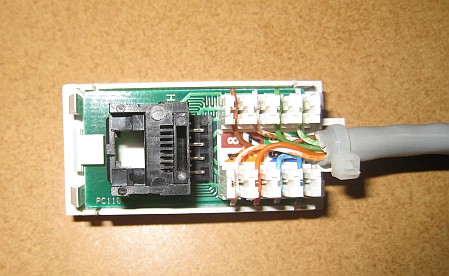

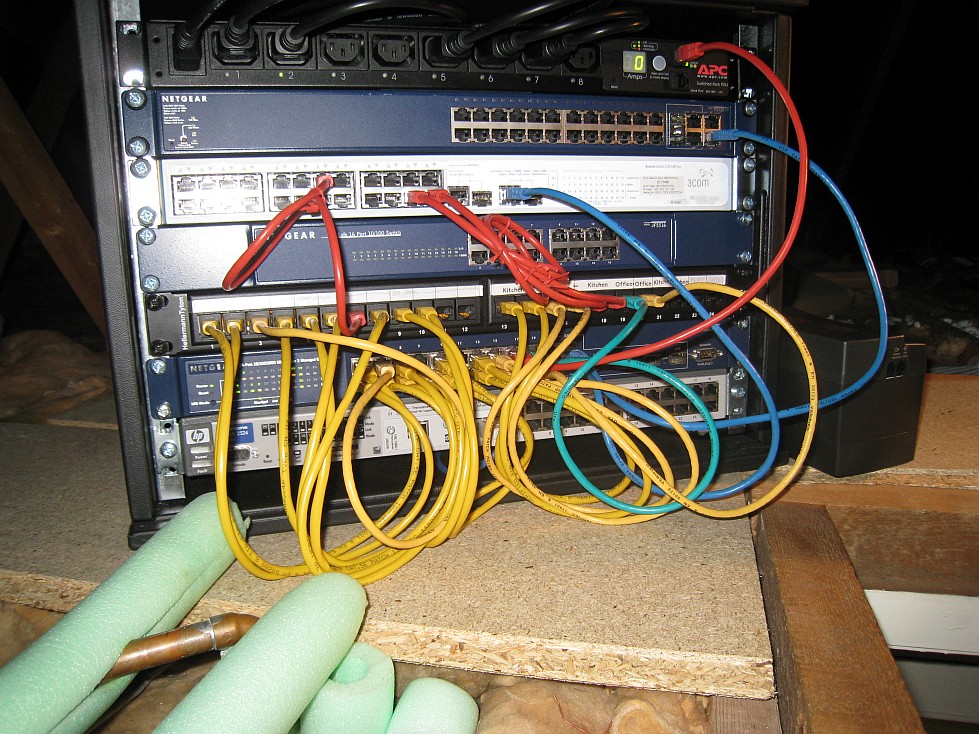

Which brings me onto another issue: the network cabling. Not everyone's like me with 8 network outlets in the kitchen. Cat 5 cable is really easy to install, it's a lot less dangerous than mains cable and it's fairly cheap. The only difficult bit is making a nice connection at either end, and that's just a case of punching 8 colour coded wires into the 8 colour coded terminals on a patch panel or outlet.

I'm not the first to wire up my pad for networking, and I sure won't be the last. Scott Hanselman has a great series of blog posts, "Wiring the new house for a Home Network", although I think it should be said both our set-ups are perhaps a little OTT (I could make do with 1 patch panel, 1 switch and 1 UPS, which would remove 5 of the devices in the rack, but then I'd have nothing to play with).

Scott's set-up is quite large. Personally I've got a small 19 inch case in the loft which is discreet and easy to manage, but only does the Cat 5 network cables. Now not everybody wants to have network cables, so you could argue that, like my Topfield 6000 PVR, it should be wireless. If you've ever tried setting up a Wi-Fi network on a device other than a PC you will know it's a hideous job. The best bet is to have a simple network connector on the fridge/freezer etc., have it default to DHCP to get its IP address and then use a Netgear wireless link to do the wireless bit. Putting a little LCD screen on the fridge just because you want Wi-Fi is going to make the costs even worse.

Hopefully in the future part of the first fit of a house will include dropping some network wiring into each room, TVs now come fitted with Ethernet network connectors and hopefully more and more appliances will, so hopefully new build houses will improve on that.

Why do I think this is never going to happen? Well, there are a few reasons:

- The general aim of manufacturing an appliance is to keep costs as low as possible. Throwing in sensors and a network port adds to that, and the cost of that extra stuff can easily be multiplied by 4 by the time the various middlemen take their cut.

- Hardware manufacturers generally don't get software, and putting high tech stuff into a low tech fridge is probably something the manufacturer is not that confident doing.

- Software support: hardware people are generally rubbish at software, which means that if we do get the network port it's likely to be a poorly implemented website, rather like the one offered on my toppy.

- Does anyone other than me and a few random geeks actually want this stuff? The basics maybe, and I'm sure a few people wish they could get an alert when the freezer is defrosting so they could save the food, but the overhead of networking up the appliances? Too much maybe. Perhaps we need a simple short range wireless set-up rather than Ethernet?

Anyway, there you have it, my crazy idea for a tech filled house.

What am I doing to move along that route and why am I sharing this dream?

Well, I've got a little ARM microcontroller running the .NET Micro Framework and a network adaptor for it. Plans include the light switch I talked about, light control using LED lamps and networked temperature monitors. Oh, and somehow I want a device to track what's in the fridge and what gets put in the rubbish bin for a new website I'm working on.

Making a hardware platform to sell is really tough and expensive, so there's no hope of me doing that. Sharing my ideas will hopefully help those that can and do.

How about you? What do you see in the future house? What tech would make your life better?

(1) Finally I will be able to see if the light stays on when the fridge door is closed!

(2) My Topfield satellite receiver has a web server with nice little web pages on it, but you're limited in what you can do with those pages and little automation is possible via that interface.

(3) I have a small Arduino project in progress to fix that issue.

(4) And that's one of the main reasons I say we won't see this: the hardware manufacturers don't get software, and when they think they do the result is usually poor. The only good combination I've seen around is Apple.

(5) One of my wishes for ThreeTomatoes.co.uk is to detect what's going in the bin.