Like me, you've probably heard various voices on the internet speaking of the importance of A/B testing. I've listened, agreed and tried a few bits here and there, but I've never seen such an obvious result as with my latest AdWords campaign.

You may have noticed I recently launched RememberTheBin.com, a reminder service so you don't forget to put the bin out. Initially I set up a Google AdWords campaign with one advert. That didn't get much interest at all, so I added a second, very similar ad.

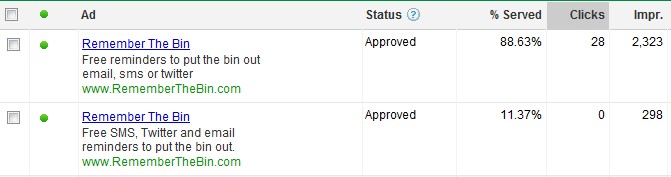

Here are my two ads:

Initially these ads were served equally. Fortunately Google has kicked in, realised it's not making money from the second one and stopped serving it so often.

The “Free SMS, Twitter and email reminders to put the bin out” ad was my first shot, and am I glad I decided to do an A/B test on it. Talk about a useless ad. No clicks whatsoever: zero, nothing, nada, zilcho, and that's over about a month!

It's interesting to note how similar the text of the two ads is and how different the responses are. They both have the same keywords, the same cost and even the same words!

My question to you is this: are you running AdWords, or even SEO, or specific landing pages? Have you tried A/B testing? If not, go, go now and try! I'll wait for you...

Which brings me nicely on to A/B testing for SEO. That's a lot more difficult and time-consuming because you have to wait for the search engines to update their index with what you want them to see. Instead, invest some cash in Google AdWords, play with A/B testing through that, see what people click on and what gets served more, then use that information in your SEO campaign.
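If you want to check that the difference between two ads is more than luck before carrying the winner over to your SEO, here is a rough sketch of a two-proportion z-test on click-through rates. The function names and the click and impression counts are made up for illustration; they are not the figures from my campaign, and the test is only an approximation when one ad has very few clicks.

```python
from math import erfc, sqrt


def click_through_rate(clicks, impressions):
    """Clicks divided by impressions."""
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    return clicks / impressions


def two_proportion_z_test(clicks_a, impressions_a, clicks_b, impressions_b):
    """Return (z, two-sided p-value) for the difference in CTR between two ads."""
    rate_a = click_through_rate(clicks_a, impressions_a)
    rate_b = click_through_rate(clicks_b, impressions_b)
    # Pooled CTR under the assumption that both ads perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    if std_err == 0:
        # No clicks at all (or all clicks): nothing to tell the ads apart.
        return 0.0, 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical numbers, purely for illustration.
z, p = two_proportion_z_test(clicks_a=30, impressions_a=1000,
                             clicks_b=0, impressions_b=1000)
print(f"z = {z:.2f}, p = {p:.2g}")
```

A small p-value (say below 0.05) suggests the gap in clicks is unlikely to be chance alone. With only a handful of impressions, it's worth letting the ads run longer before trusting the result.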